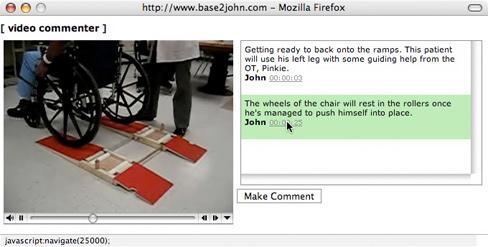

A few years ago, John Schimmel and I worked on an in-time commenting system for video. Specifically, we made a WordPress plugin that interfaced with the built-in WordPress commenting system, including user authentication, spam prevention, and so on.

Unfortunately, it no longer works out of the box because we used the QuickTime plugin for video and support for that is waning in the browser space.

Yesterday, I did a quick-and-dirty update to allow the plugin to use HTML5 video rather than QuickTime. To my delight, it mostly works: Video Commenting Test (try it in Safari or Chrome, as the video is MP4/H.264).
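To give a rough idea of what "in-time" means with HTML5 video, here is a minimal sketch in TypeScript. It is not the plugin's actual code: the `TimedComment` shape, the `commentsAt` function, and the five-second display window are all assumptions made for illustration. The idea is that each comment carries a timestamp, and as the video plays you look up the comments that belong to the current moment.

```typescript
// Hypothetical shape for an in-time comment; the plugin's real data model may differ.
interface TimedComment {
  author: string;
  text: string;
  time: number; // position in the video, in seconds
}

// Return the comments posted at or before `currentTime` that are still
// inside the display window, ordered by their position in the video.
// The 5-second default window is an arbitrary choice for this sketch.
function commentsAt(
  comments: TimedComment[],
  currentTime: number,
  windowSeconds: number = 5
): TimedComment[] {
  return comments
    .filter((c) => c.time <= currentTime && currentTime - c.time < windowSeconds)
    .sort((a, b) => a.time - b.time);
}

// In a browser this would typically be driven by the video element, e.g.:
//   video.addEventListener("timeupdate", () => {
//     render(commentsAt(allComments, video.currentTime)); // `render` is hypothetical
//   });
```

The `timeupdate` event and `currentTime` property are part of the standard HTML5 media element, which is what makes this much simpler than it was with the QuickTime plugin.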

What still needs to be done is to update the admin interface to allow multiple video sources and MIME type selections for HTML5 video, and to remove the QuickTime-specific portions.
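For anyone wondering why multiple sources and MIME types matter: an HTML5 `<video>` element can list several `<source>` children, and the browser plays the first one whose type it supports. Below is a small TypeScript sketch of how a list of sources might be turned into that markup. The `VideoSource` type and the `videoMarkup` and `escapeAttr` helpers are hypothetical names for this example, not something that exists in the plugin.

```typescript
// Hypothetical description of one encoded version of the video.
interface VideoSource {
  url: string;
  mimeType: string; // e.g. "video/mp4" or "video/webm"
}

// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build a <video> element with one <source> per entry, in the order given.
// Browsers try the sources top to bottom and use the first playable one.
function videoMarkup(sources: VideoSource[]): string {
  const tags = sources.map(
    (s) => `  <source src="${escapeAttr(s.url)}" type="${escapeAttr(s.mimeType)}">`
  );
  return ["<video controls>", ...tags, "</video>"].join("\n");
}
```

With something like this, the admin interface would only need to collect a URL and a MIME type for each encoded version of the video.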

Also, I would love to put an HTML5 canvas on top of this and let people make spatial in-time comments!

If you are interested, I put it up on GitHub (make sure you use the html5 branch). Pull requests are welcome!